LRU

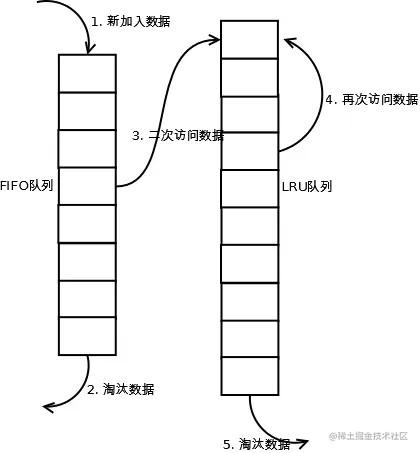

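LRU (Least Recently Used) is a cache eviction algorithm that offers a simple and efficient way to store data. It also handles eviction automatically: when the cache reaches its preset size limit, it removes the item that was used least recently.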

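A typical implementation keeps entries in a linked list, ordered from most recently used (head) to least recently used (tail):

- New data is inserted at the head of the list.
- On every cache hit (i.e., whenever cached data is accessed), that entry is moved to the head.
- When the list is full, the entry at the tail is discarded.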
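The three rules above map directly onto a hash map combined with a doubly linked list. Below is a minimal sketch in JavaScript. It assumes a fixed capacity counted in entries. `LinkedLRU`, `_unlink`, `_pushFront` and `keys` are hypothetical names chosen for illustration and do not belong to any library.

```javascript
// Hypothetical LinkedLRU: a Map gives O(1) lookup from key to list node,
// and a doubly linked list keeps entries ordered from most recently used
// (head) to least recently used (tail).
class LinkedLRU {
  constructor(capacity) {
    if (!Number.isInteger(capacity) || capacity <= 0) {
      throw new RangeError('capacity must be a positive integer')
    }
    this.capacity = capacity
    this.map = new Map() // key -> node
    // Sentinel head/tail nodes avoid null checks at either end.
    this.head = { prev: null, next: null }
    this.tail = { prev: null, next: null }
    this.head.next = this.tail
    this.tail.prev = this.head
  }

  // Detach a node from its current position.
  _unlink(node) {
    node.prev.next = node.next
    node.next.prev = node.prev
  }

  // Attach a node right after the head sentinel (most recently used).
  _pushFront(node) {
    node.prev = this.head
    node.next = this.head.next
    this.head.next.prev = node
    this.head.next = node
  }

  get(key) {
    const node = this.map.get(key)
    if (node === undefined) return undefined
    // Cache hit: move the entry to the head of the list.
    this._unlink(node)
    this._pushFront(node)
    return node.value
  }

  set(key, value) {
    const existing = this.map.get(key)
    if (existing !== undefined) {
      // Overwriting counts as a use: update the value and move it to the head.
      existing.value = value
      this._unlink(existing)
      this._pushFront(existing)
      return
    }
    if (this.map.size >= this.capacity) {
      // List is full: discard the least recently used entry at the tail.
      const oldest = this.tail.prev
      this._unlink(oldest)
      this.map.delete(oldest.key)
    }
    // New data goes in at the head.
    const node = { key, value, prev: null, next: null }
    this._pushFront(node)
    this.map.set(key, node)
  }

  // Keys from most to least recently used, for inspection.
  keys() {
    const out = []
    for (let n = this.head.next; n !== this.tail; n = n.next) out.push(n.key)
    return out
  }
}

const lru = new LinkedLRU(2)
lru.set('a', 1)
lru.set('b', 2)
lru.get('a')    // 1; 'a' is now the most recently used
lru.set('c', 3) // full, so 'b' (least recently used) is evicted
lru.keys()      // ['c', 'a']
```

Both `get` and `set` run in O(1) time. The Map locates the node directly, and moving a node only touches its immediate neighbours. A plain JavaScript `Map` can also serve on its own, because it iterates keys in insertion order. In that case, deleting a key and re-inserting it plays the role of "move to head".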

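lru-cache

The npm package `lru-cache` provides a ready-made LRU cache. Example usage, based on the package's README: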

```javascript
// lru-cache is a hybrid module: either import style works
import { LRUCache } from 'lru-cache'

// or, in CommonJS:
// const { LRUCache } = require('lru-cache')

// or, in minified form for web browsers:
// import { LRUCache } from 'http://unpkg.com/lru-cache@9/dist/mjs/index.min.mjs'
```

```javascript
// At least one of 'max', 'ttl', or 'maxSize' is required, to prevent
// unsafe unbounded storage.
//
// In most cases, it's best to specify a max for performance, so all
// the required memory allocation is done up-front.
//
// All the other options are optional; see the lru-cache documentation
// for what each one does. Most of them can be overridden for specific
// items in get()/set().
const options = {
  max: 500,

  // for use with tracking overall storage size
  maxSize: 5000,
  sizeCalculation: (value, key) => {
    return 1
  },

  // for use when you need to clean up something when objects
  // are evicted from the cache
  dispose: (value, key) => {
    // freeFromMemoryOrWhatever is a placeholder for your own cleanup code
    freeFromMemoryOrWhatever(value)
  },

  // how long to live in ms
  ttl: 1000 * 60 * 5,

  // return stale items before removing from cache?
  allowStale: false,

  updateAgeOnGet: false,
  updateAgeOnHas: false,

  // async method to use for cache.fetch(), for
  // stale-while-revalidate type of behavior
  fetchMethod: async (key, staleValue, { options, signal, context }) => {},
}
```
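The `maxSize`, `sizeCalculation` and `dispose` options add a second limit, this one based on total size rather than entry count, and run a cleanup hook for each evicted item. The sketch below models that idea under stated assumptions. `SizeBoundedLRU` is a hypothetical class, sizes must be positive integers, and recency is tracked with a `Map`'s insertion order. This is not how `lru-cache` is implemented internally.

```javascript
// Hypothetical SizeBoundedLRU mirroring the maxSize / sizeCalculation /
// dispose options above. Not lru-cache's implementation: recency is
// tracked with a Map's insertion order (oldest key first) instead of a
// linked list.
class SizeBoundedLRU {
  constructor({ maxSize, sizeCalculation, dispose = () => {} }) {
    this.maxSize = maxSize
    this.sizeCalculation = sizeCalculation
    this.dispose = dispose
    this.entries = new Map() // key -> { value, size }
    this.totalSize = 0
  }

  get(key) {
    const entry = this.entries.get(key)
    if (entry === undefined) return undefined
    // Delete and re-insert so the key moves to the "most recent" end.
    this.entries.delete(key)
    this.entries.set(key, entry)
    return entry.value
  }

  set(key, value) {
    const size = this.sizeCalculation(value, key)
    if (!Number.isInteger(size) || size <= 0) {
      throw new TypeError('sizeCalculation must return a positive integer')
    }
    // An item bigger than the whole budget is not stored at all.
    if (size > this.maxSize) return false
    const old = this.entries.get(key)
    if (old !== undefined) {
      // Overwrite: release the old entry's size before re-adding.
      this.entries.delete(key)
      this.totalSize -= old.size
    }
    // Evict from the least recently used end until the new item fits.
    while (this.totalSize + size > this.maxSize) {
      const [oldestKey, oldest] = this.entries.entries().next().value
      this.entries.delete(oldestKey)
      this.totalSize -= oldest.size
      this.dispose(oldest.value, oldestKey)
    }
    this.entries.set(key, { value, size })
    this.totalSize += size
    return true
  }
}

const evicted = []
const sized = new SizeBoundedLRU({
  maxSize: 10,
  sizeCalculation: (value) => value.length, // size = string length
  dispose: (value, key) => evicted.push(key),
})
sized.set('a', 'xxxx') // total size 4
sized.set('b', 'xxxx') // total size 8
sized.get('a')         // 'a' becomes most recently used
sized.set('c', 'xxxx') // 8 + 4 > 10, so 'b' is evicted and disposed
// evicted is now ['b'] and sized.totalSize is 8
```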

```javascript
const cache = new LRUCache(options)

cache.set('key', 'value')
cache.get('key') // "value"
```
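The `ttl`, `allowStale` and `updateAgeOnGet` options in the configuration control expiry. The hypothetical `TTLCache` below is a rough, assumption-based model of those semantics, not lru-cache's code. It stores each entry's start time and treats an entry older than `ttl` ms as stale. It can optionally return a stale value once before dropping it, and can optionally restart an entry's age on every `get`. The `now` clock parameter is also an added assumption, so the example can advance time deterministically.

```javascript
// Hypothetical TTL-only cache illustrating ttl / allowStale / updateAgeOnGet.
// `now` is an injectable clock (an assumption, used to fake the passage of time).
class TTLCache {
  constructor({ ttl, allowStale = false, updateAgeOnGet = false, now = Date.now }) {
    this.ttl = ttl
    this.allowStale = allowStale
    this.updateAgeOnGet = updateAgeOnGet
    this.now = now
    this.entries = new Map() // key -> { value, start }
  }

  set(key, value) {
    this.entries.set(key, { value, start: this.now() })
  }

  get(key) {
    const entry = this.entries.get(key)
    if (entry === undefined) return undefined
    if (this.now() - entry.start > this.ttl) {
      // Stale: drop it, optionally handing back the stale value one last time.
      this.entries.delete(key)
      return this.allowStale ? entry.value : undefined
    }
    // Fresh hit: optionally restart the entry's age.
    if (this.updateAgeOnGet) entry.start = this.now()
    return entry.value
  }
}

let t = 0
const clock = () => t

const strict = new TTLCache({ ttl: 100, now: clock })
const lenient = new TTLCache({ ttl: 100, allowStale: true, now: clock })
const sliding = new TTLCache({ ttl: 100, updateAgeOnGet: true, now: clock })
strict.set('k', 'v')
lenient.set('k', 'v')
sliding.set('k', 'v')

t = 80
sliding.get('k') // 'v'; its age restarts at t = 80

t = 150
strict.get('k')  // undefined: 150 ms old, older than ttl
lenient.get('k') // 'v': stale value returned once, then removed
lenient.get('k') // undefined
sliding.get('k') // 'v': only 70 ms since the last get
```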

```javascript
import assert from 'node:assert'

// non-string keys ARE fully supported,
// but note that it must be THE SAME object, not
// just a JSON-equivalent object.
const someObject = { a: 1 }
cache.set(someObject, 'a value')

// Object keys are not toString()-ed
cache.set('[object Object]', 'a different value')
assert.equal(cache.get(someObject), 'a value')

// A similar object with same keys/values won't work,
// because it's a different object identity
assert.equal(cache.get({ a: 1 }), undefined)

cache.clear() // empty the cache
```
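A plain JavaScript `Map` follows the same identity rule for object keys. If value-equal objects should share one entry, a common workaround is to convert the object into a string key first. The sketch below uses a `Map` as a stand-in for the cache. `keyOf` is a hypothetical helper, and it assumes keys are JSON-serializable and always built with the same property order.

```javascript
// A plain Map compares object keys by identity, which is the same
// behaviour the cache example above demonstrates.
const byIdentity = new Map()
const original = { a: 1 }
byIdentity.set(original, 'a value')
byIdentity.get(original) // 'a value'
byIdentity.get({ a: 1 }) // undefined: a different object

// Hypothetical workaround (not an lru-cache feature): derive a string key
// from the object's contents. This assumes keys are JSON-serializable.
const keyOf = (obj) => JSON.stringify(obj)
const byValue = new Map()
byValue.set(keyOf({ a: 1 }), 'a value')
byValue.get(keyOf({ a: 1 })) // 'a value': equal contents, equal string key

// Caveat: JSON.stringify follows property order, so these two keys differ.
keyOf({ a: 1, b: 2 }) === keyOf({ b: 2, a: 1 }) // false
```

The resulting string can be passed to `cache.set()` and `cache.get()` like any other string key.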

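Drawbacks

An LRU cache is an in-memory cache that lives inside a single process. In a distributed system it therefore cannot be shared between machines: each machine holds its own copy, and nothing keeps those copies synchronized.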
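Here is a small simulation that makes the problem concrete, under assumed conditions. Two hypothetical application nodes share one database, and each node keeps its own in-process cache, with a plain `Map` standing in for an LRU cache. `makeNode`, `read` and `write` are illustrative names only.

```javascript
// Hypothetical setup: two app nodes share one database, but each keeps
// its own in-process cache (a Map stands in for an LRU cache here).
const database = new Map([['user:1', 'Alice']])

function makeNode() {
  const localCache = new Map()
  return {
    read(key) {
      if (localCache.has(key)) return localCache.get(key) // served from memory
      const value = database.get(key)
      localCache.set(key, value)
      return value
    },
    write(key, value) {
      database.set(key, value)
      localCache.set(key, value) // only THIS node's cache is updated
    },
  }
}

const nodeA = makeNode()
const nodeB = makeNode()
nodeA.read('user:1')         // 'Alice' (now cached on A)
nodeB.read('user:1')         // 'Alice' (now cached on B)
nodeA.write('user:1', 'Bob') // database and A's cache now say 'Bob'
nodeB.read('user:1')         // still 'Alice': B's cache is stale
```

Keeping node-local caches consistent requires extra machinery, such as invalidation messages between nodes or a shared cache server. An in-process LRU cache does not provide this on its own.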